Jenkins Pipeline is a suite of plugins which supports implementing and integrating continuous delivery pipelines into Jenkins.

I will use it to deploy a Mule application to Anypoint Platform Runtime Manager and store the build artifact in Artifactory.

My setup runs Jenkins and Artifactory in Docker, and the source is stored in GitLab.

Jenkins Setup

For detailed instructions, please refer to the documentation for the official Jenkins Docker image.

Download and start a Jenkins Docker image.

    docker run -p 8080:8080 -p 50000:50000 -v jenkins_home:/var/jenkins_home jenkins/jenkins:lts

When this is done, follow the instructions printed in the console to complete the admin login.
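
If the console output has already scrolled away, the generated password can also be read from inside the container. This is a minimal sketch, assuming a single container started from `jenkins/jenkins:lts` as in the command above; the function name `jenkins_admin_password` is only illustrative.

```shell
#!/bin/sh
# Print the initial admin password of a running Jenkins container.
# Assumption: one container based on jenkins/jenkins:lts is running.
jenkins_admin_password() {
    # Look up the container ID by the image it was started from.
    cid=$(docker ps -q --filter ancestor=jenkins/jenkins:lts | head -n 1)
    if [ -z "$cid" ]; then
        echo "no running jenkins/jenkins:lts container found" >&2
        return 1
    fi
    # Jenkins writes the generated password to this file on first start.
    docker exec "$cid" cat /var/jenkins_home/secrets/initialAdminPassword
}
```

Calling `jenkins_admin_password` prints the value to paste into the unlock screen.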

Next, I install the required plugins: under Manage Jenkins > Manage Plugins, install the Artifactory Plugin and the Config File Provider Plugin.

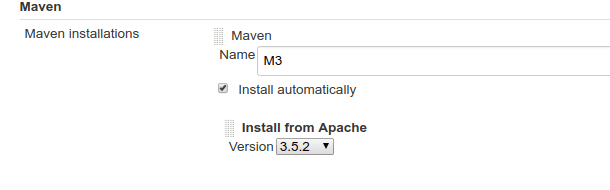

I’m using Maven for the build and deployment, so under Global Tool Configuration I add a Maven installation named M3 and let Jenkins install it automatically.

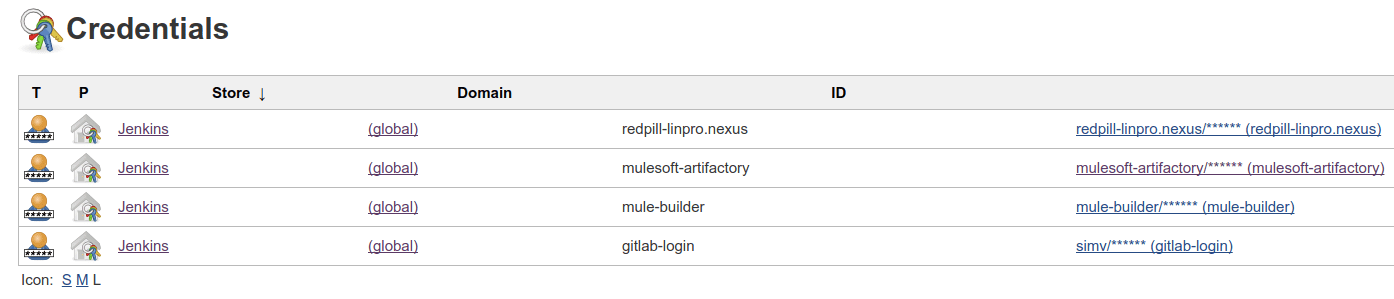

To avoid storing credentials in clear text in our configuration files and in source control, we set them up in Jenkins Credentials.

We need credentials for the Mule Nexus repository, our GitLab account, our Anypoint Platform account, and Artifactory.

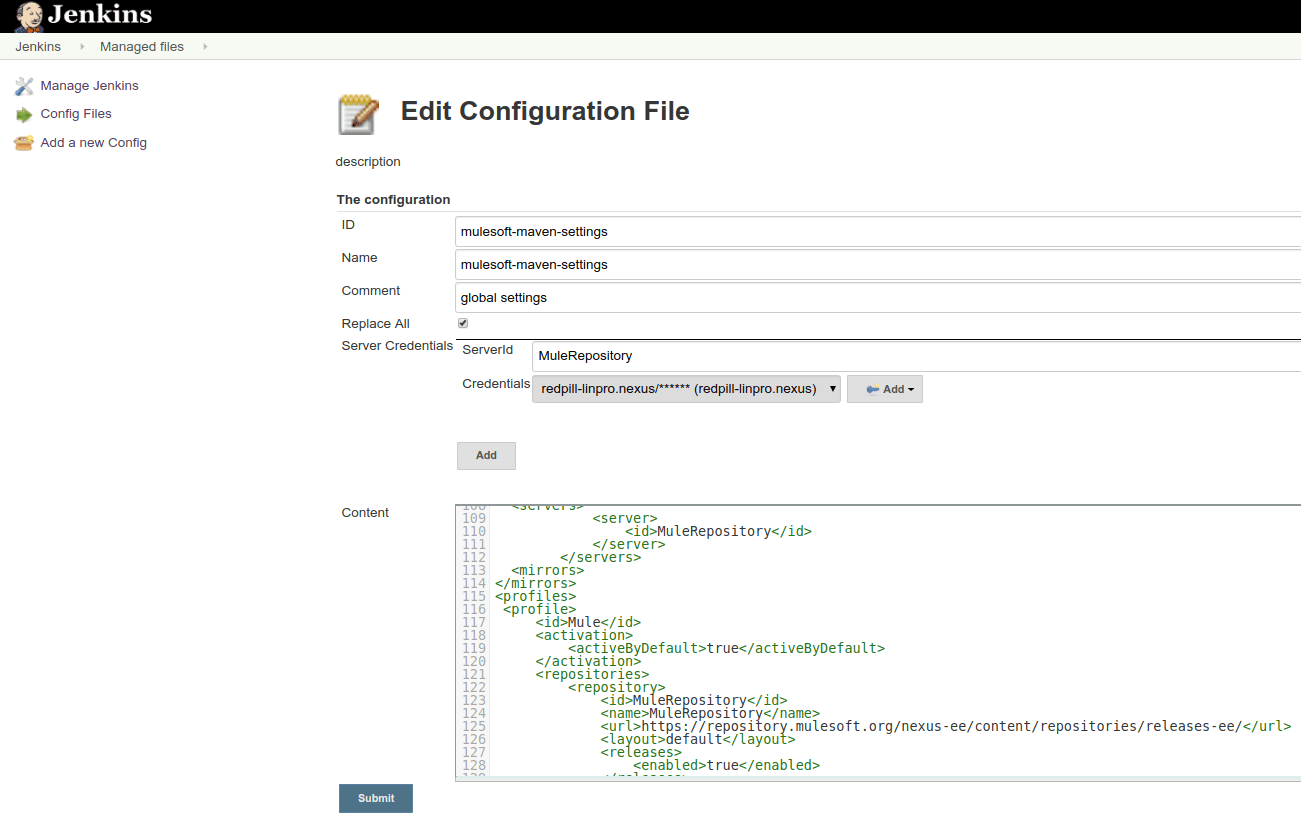

Next, I add a config file named mulesoft-maven-settings with the Config File Provider Plugin, so the build has access to the enterprise repository. Because we don’t want to store credentials in clear text, I select the Jenkins credentials for Nexus under Server Credentials.

Artifactory Setup

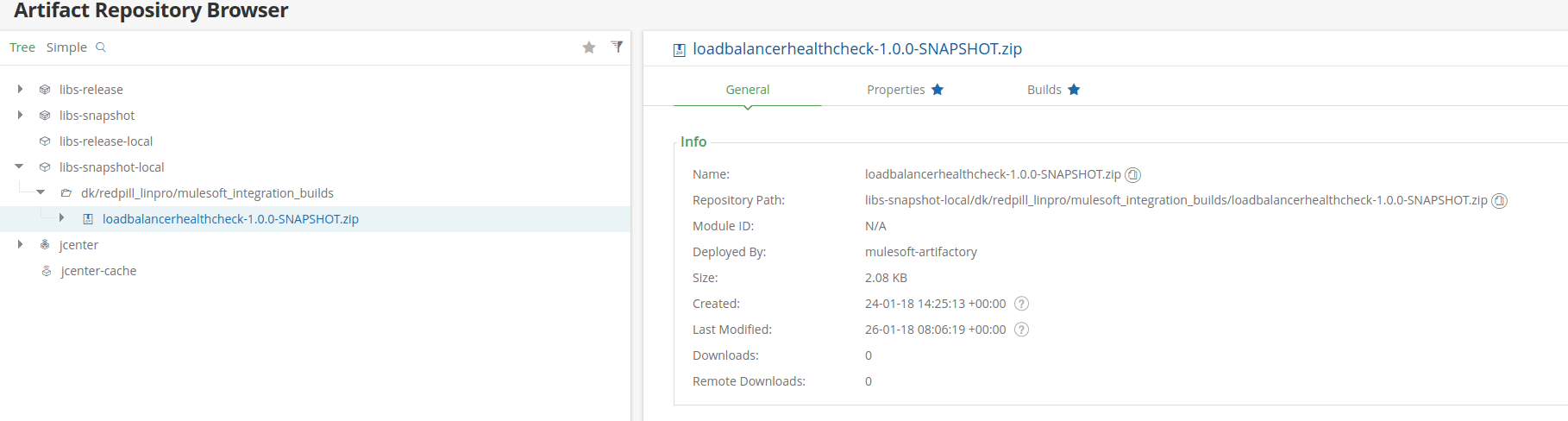

Anypoint Platform Runtime Manager only stores the latest version of our deployment, so to keep a history of our builds we store them in Artifactory.

For this setup, I’m not using Artifactory to manage the repositories set in settings.xml, but I would recommend that if possible.

For detailed instructions, please refer to the Artifactory guide "Installing with Docker".

Download and start an Artifactory Docker image.

    docker run --name artifactory -d -p 8081:8081 docker.bintray.io/jfrog/artifactory-oss:latest
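
Artifactory takes a little while to start, so it can help to wait for it before configuring anything. The sketch below polls the system ping endpoint; it assumes the port mapping from the command above, that the ping endpoint is reachable without logging in, and a hypothetical default of 30 attempts five seconds apart.

```shell
#!/bin/sh
# Wait until Artifactory answers its ping endpoint, or give up.
# Assumptions: port 8081 on localhost (from the docker run command),
# anonymous access to the ping endpoint, illustrative retry defaults.
wait_for_artifactory() {
    url="${1:-http://localhost:8081/artifactory/api/system/ping}"
    attempts="${2:-30}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # -f makes curl fail on HTTP errors, -s silences progress output.
        if curl -fs "$url" >/dev/null; then
            echo "Artifactory is up"
            return 0
        fi
        i=$((i + 1))
        sleep 5
    done
    echo "Artifactory did not respond at $url" >&2
    return 1
}
```

Running `wait_for_artifactory` with no arguments uses the defaults; a different URL and attempt count can be passed as the first and second argument.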

Afterwards, add the same credentials we configured in Jenkins, and give them write permission to a repository, e.g. libs-snapshot-local.

Jenkinsfile Setup

In my project, I have created a Jenkinsfile which contains the build steps and references the mulesoft-maven-settings file and the credentials. It should be possible to define the Artifactory server in Jenkins and only reference it here, but I ran into some issues, so here I reference it directly.

    pipeline {
        agent any

        environment {
            def mvn_version = 'M3'
            def uploadSpec = """{
                "files": [
                    {
                        "pattern": "target/*.zip",
                        "target": "libs-snapshot-local/dk/redpill_linpro/mulesoft_integration_builds/"
                    }
                ]
            }"""
        }

        stages {
            stage('Build') {
                steps {
                    configFileProvider(
                        [configFile(fileId: 'mulesoft-maven-settings', variable: 'MAVEN_SETTINGS')]) {
                        withEnv(["PATH+MAVEN=${tool mvn_version}/bin"]) {
                            sh 'mvn -s $MAVEN_SETTINGS clean package -DskipTests=true'
                        }
                    }
                }
            }

            stage('Deploy Test') {
                steps {
                    configFileProvider(
                        [configFile(fileId: 'mulesoft-maven-settings', variable: 'MAVEN_SETTINGS')]) {
                        withEnv(["PATH+MAVEN=${tool mvn_version}/bin"]) {
                            withCredentials([[$class: 'UsernamePasswordMultiBinding', credentialsId: 'mule-builder', usernameVariable: 'USERNAME', passwordVariable: 'PASSWORD']]) {
                                sh 'mvn -s $MAVEN_SETTINGS -Ddeploy.username=$USERNAME -Ddeploy.password=$PASSWORD deploy -DskipTests=true'
                            }
                        }
                    }
                }
            }

            stage('Upload Artifact') {
                steps {
                    withEnv(["PATH+MAVEN=${tool mvn_version}/bin"]) {
                        script {
                            def server = Artifactory.newServer url: 'http://<artifactory url>:8081/artifactory', credentialsId: 'mulesoft-artifactory'
                            server.bypassProxy = true
                            def buildInfo = server.upload spec: uploadSpec
                        }
                    }
                }
            }
        }
    }
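
To make the upload spec in the Jenkinsfile more concrete, here is roughly what it amounts to when done by hand: every zip under `target/` is sent to the target folder with an HTTP PUT. This is only a sketch, not what the Artifactory plugin does internally (the plugin also handles build info). `ARTIFACTORY_URL`, `ARTIFACTORY_USER` and `ARTIFACTORY_PASSWORD` are hypothetical variable names, and the localhost URL is an assumed default.

```shell
#!/bin/sh
# Upload every zip under target/ to the folder named in the upload spec.
# Assumption: the ARTIFACTORY_* variables are illustrative names.
upload_zips() {
    base="${ARTIFACTORY_URL:-http://localhost:8081/artifactory}"
    dest="libs-snapshot-local/dk/redpill_linpro/mulesoft_integration_builds"
    for f in target/*.zip; do
        # An unmatched glob stays literal, so skip it when nothing was built.
        [ -e "$f" ] || continue
        # -T sends the file with HTTP PUT to the given repository path.
        curl -fs -u "${ARTIFACTORY_USER:-}:${ARTIFACTORY_PASSWORD:-}" \
            -T "$f" "$base/$dest/$(basename "$f")" || return 1
    done
}
```

Run `upload_zips` from the project root after `mvn clean package` has produced the zip.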

Maven Setup

The Maven POM file needs to know how to perform the deployment, so the Mule Maven plugin is bound to the deploy phase. The standard Maven deploy plugin is disabled so that it does not cause errors.

    <plugin>
        <groupId>org.mule.tools.maven</groupId>
        <artifactId>mule-maven-plugin</artifactId>
        <version>2.2.1</version>
        <configuration>
            <deploymentType>arm</deploymentType>
            <username>${deploy.username}</username>
            <password>${deploy.password}</password>
            <target>kubernetes</target> <!-- group or server name to deploy to -->
            <targetType>serverGroup</targetType> <!-- One of: server, serverGroup, cluster -->
            <applicationName>lbhealthcheck</applicationName>
            <environment>Dev</environment>
            <businessGroup>Redpill-Linpro Group</businessGroup>
            <url>https://anypoint.mulesoft.com</url>
            <redeploy>true</redeploy>
        </configuration>
        <executions>
            <execution>
                <id>deploy</id>
                <phase>deploy</phase>
                <goals>
                    <goal>deploy</goal>
                </goals>
            </execution>
        </executions>
    </plugin>
    <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-deploy-plugin</artifactId>
        <configuration>
            <skip>true</skip>
        </configuration>
    </plugin>
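
Before wiring this into Jenkins, it can be useful to try the same deployment from a developer machine. The sketch below runs the command from the Deploy Test stage; it assumes `settings.xml` is a local copy of the mulesoft-maven-settings file, and `ANYPOINT_USERNAME` and `ANYPOINT_PASSWORD` are hypothetical variable names.

```shell
#!/bin/sh
# Run the same Maven deploy as the 'Deploy Test' stage, outside Jenkins.
# Assumptions: a local settings file, and credentials supplied through
# the illustrative ANYPOINT_USERNAME / ANYPOINT_PASSWORD variables.
deploy_to_runtime_manager() {
    settings="${1:-settings.xml}"
    if [ -z "${ANYPOINT_USERNAME:-}" ] || [ -z "${ANYPOINT_PASSWORD:-}" ]; then
        echo "set ANYPOINT_USERNAME and ANYPOINT_PASSWORD first" >&2
        return 1
    fi
    # These properties fill ${deploy.username} and ${deploy.password} in the POM.
    mvn -s "$settings" \
        -Ddeploy.username="$ANYPOINT_USERNAME" \
        -Ddeploy.password="$ANYPOINT_PASSWORD" \
        deploy -DskipTests=true
}
```

Note that properties passed with `-D` are visible in the process list while Maven runs, so this is best kept to a trusted machine.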

Adding a Job to Jenkins

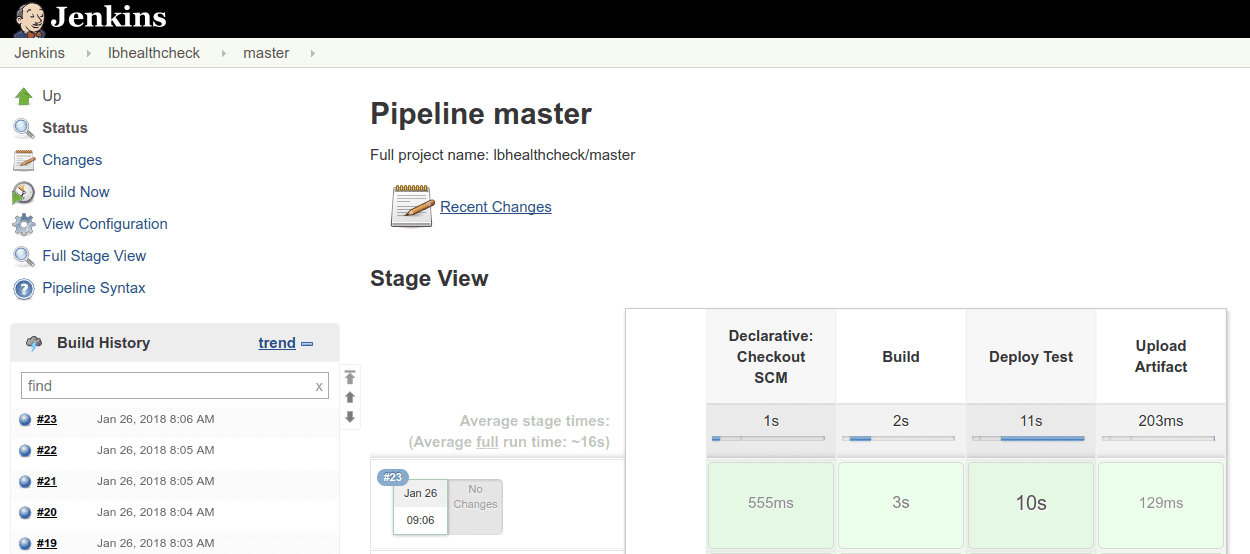

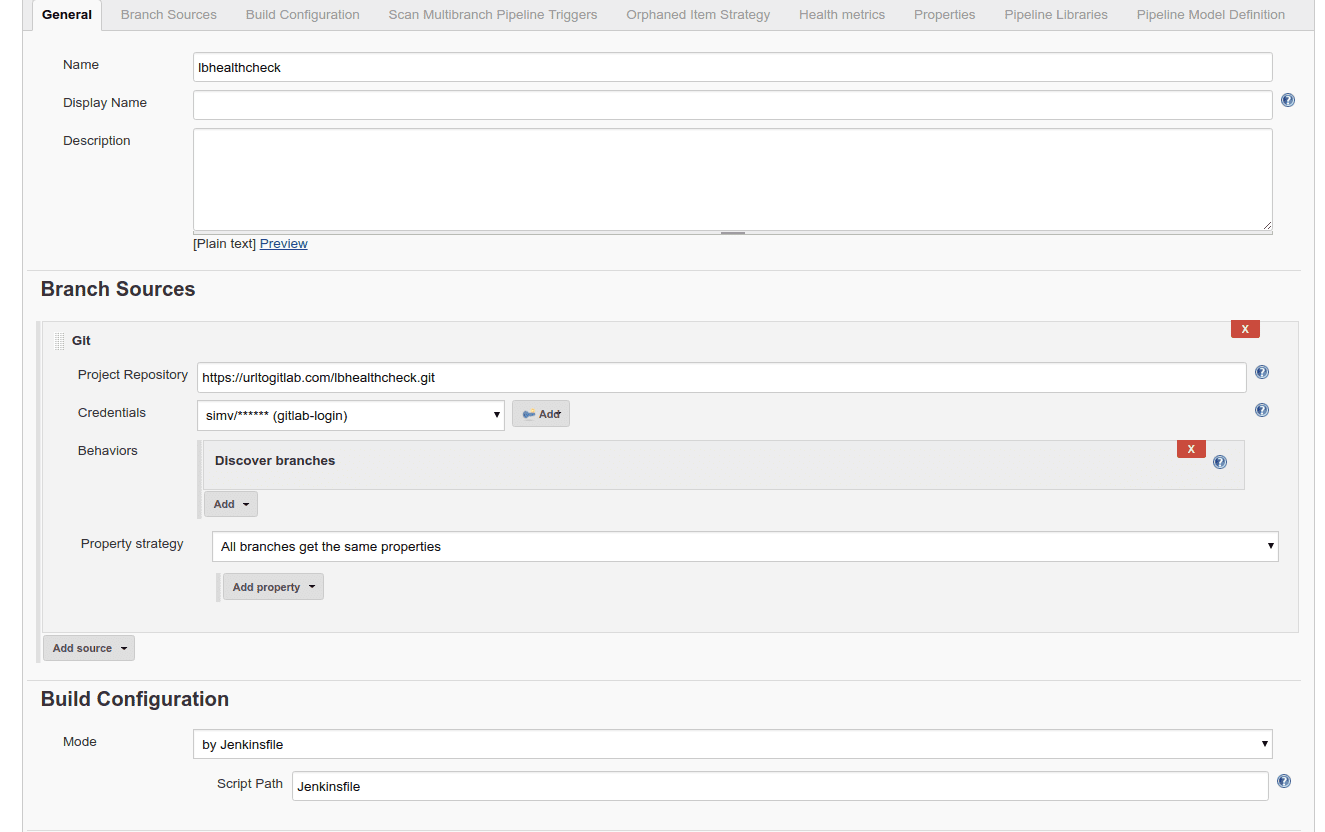

When everything is set up, we can add a pipeline to Jenkins. I use a multibranch pipeline and create a job that points to my GitLab repository, from where it reads the Jenkinsfile.

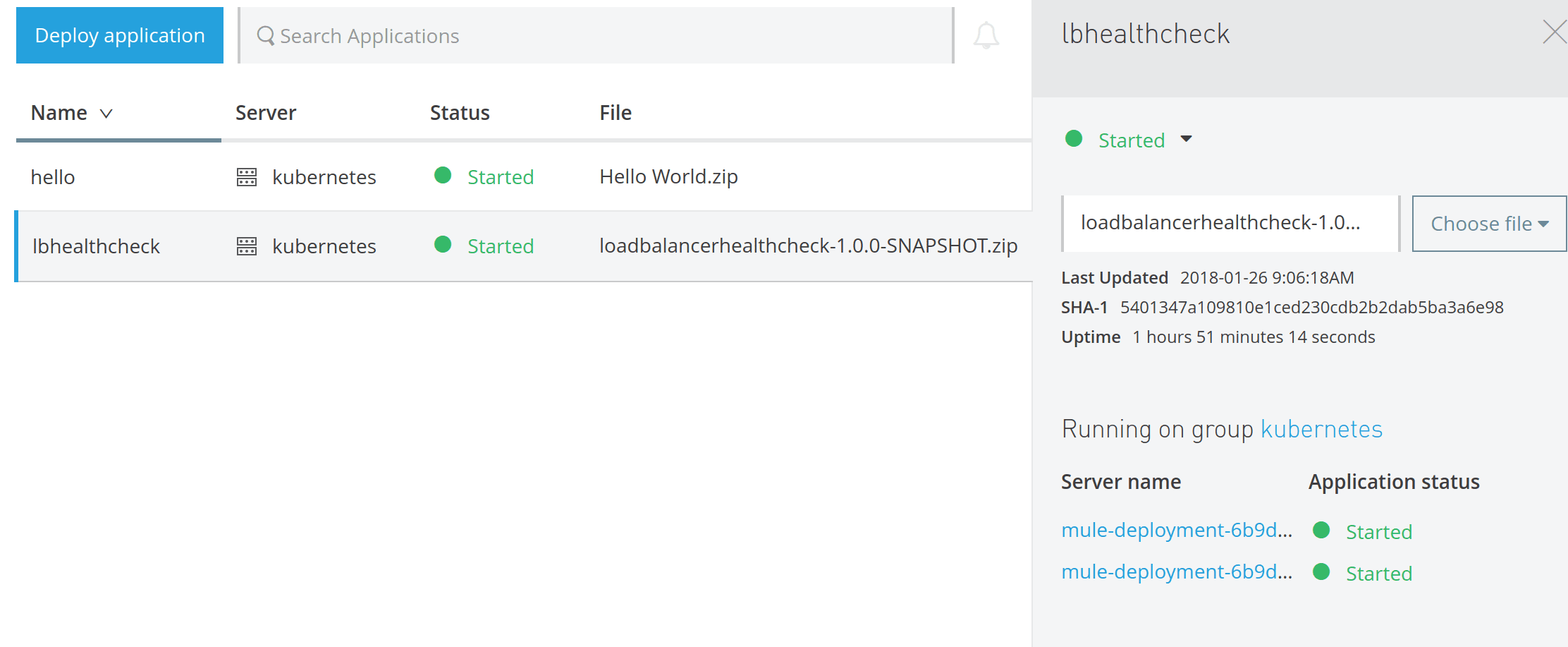

Afterwards, we are able to build, and if everything is correct we should see the application deployed to Runtime Manager and the zip stored in Artifactory.
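
The Artifactory side can also be checked without opening the UI. A small sketch, assuming the repository path from the Jenkinsfile and hypothetical `ARTIFACTORY_URL`, `ARTIFACTORY_USER` and `ARTIFACTORY_PASSWORD` variables, asks the storage API for the contents of the target folder:

```shell
#!/bin/sh
# Show what the pipeline uploaded to the target folder from the upload spec.
# Assumption: the ARTIFACTORY_* variables are illustrative names.
list_uploaded_builds() {
    base="${ARTIFACTORY_URL:-http://localhost:8081/artifactory}"
    path="libs-snapshot-local/dk/redpill_linpro/mulesoft_integration_builds"
    # GET /api/storage/<repo>/<path> returns folder info as JSON,
    # including the children of the folder.
    curl -fs -u "${ARTIFACTORY_USER:-}:${ARTIFACTORY_PASSWORD:-}" \
        "$base/api/storage/$path"
}
```

If the build succeeded, the JSON returned by `list_uploaded_builds` should list the uploaded zip among the children of the folder.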