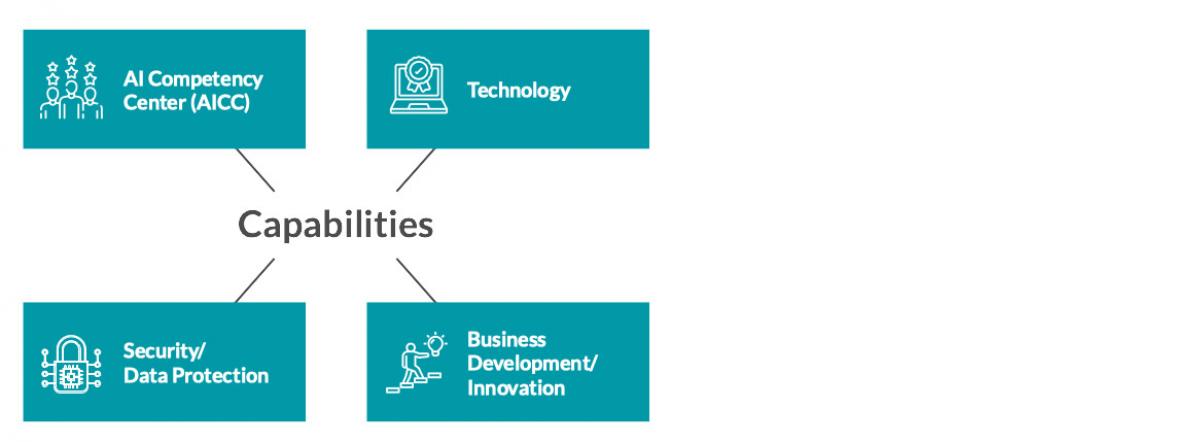

In this AI Ready blog post we address the capability “Security & Data Protection”. We have chosen to treat it as a capability because it is an important consideration for any AI initiative, and we believe the knowledge it requires should be shared by all initiatives rather than rediscovered by each one.

These considerations can be complex and difficult to handle within a single project. That is why we think “Security & Data Protection” should be one of the commonly available capabilities that support AI initiatives.

So what concerns need to be addressed, what decisions need to be made, and what risks does AI pose to security and data protection?

Security concerns to address

First of all, security concerns need to be addressed when implementing AI technology. As digitalization advances, organisations must apply careful consideration, a decent amount of common sense, and the formal regulations that apply. In the EU we already have regulations such as GDPR, the Cyber Resilience Act, NIS2 and the AI Act, among others. These regulations and considerations are designed to ensure that digital systems, AI among them, are safe, transparent, traceable, non-discriminatory and environmentally friendly.

AI systems should be overseen by people, rather than by automation, to prevent harmful outcomes. These are the overarching regulations and considerations that the tooling we choose to support our initiatives must comply with.

Ideally, the technology we intend to use has already been established as a capability (see the previous blog post/chapter), so that we can test these considerations against the technology and models we plan to use. The two capabilities are naturally related and need to be kept in sync.

Worst case scenario

GDPR, and privacy protection in particular, comes into play as soon as we start sharing information and data with AI models. Most AI models today run on one of the global hyperscaler cloud platforms and their AI engines. Lawyers have raised concerns about sharing personal data with these services, since it is hard to control who accesses the information and how the models combine the data provided to them.

For instance, a piece of personal information (a name, street address, email address or similar) might be shared with an AI model. If the model then matches that information, accidentally or deliberately, with medical data or other sensitive information made available to it from another source, the result is a serious breach of personal privacy.

The worst part is that such a breach might be hard to detect, since in the worst case the information I share is matched and displayed to someone else using the same model. This may be a genuine corner case, and there should be protective measures within the models to prevent it from happening. We describe the scenario here simply as an example of what you need to be wary of when using AI models and technology.

Available tooling

There are also AI models and tools that can be installed on-premises or in regional cloud services (hosted and managed within EU borders). With the on-premises option, it is often difficult to find enough computing power to run the models, and the number of available models is also limited. Both the number of models and the computing power offered by regional cloud vendors are growing, so even if the options are limited today, more may become available in the near future.

These alternatives may appeal to organisations that are very careful with their data, or that cannot share any data at all for security or other reasons. In any case, the “Security & Data Protection” capability of your AI Ready initiative should hold the guidelines, documentation, best practices and know-how that enable your organisation's projects and initiatives to handle their data safely in the world of AI, instead of each initiative having to find and evaluate this information on its own.